LeonShangguan

从stable-baseline3的源代码来看主要是

if entropy is not None:

entropy_loss = -th.mean(entropy)

进一步找代码可以看到,实际上返回的entropy是

distribution = self._get_action_dist_from_latent(latent_pi, latent_sde)

return distribution.entropy()

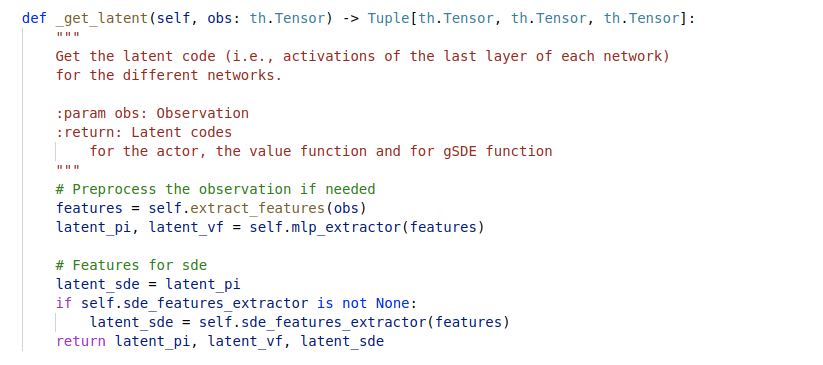

注意,其中的latent_pi和latent_sde是指:

也就是两个变量之间的熵

def _get_action_dist_from_latent(self, latent_pi: th.Tensor, latent_sde: Optional[th.Tensor] = None) -> Distribution:

"""

Retrieve action distribution given the latent codes.

:param latent_pi: Latent code for the actor

:param latent_sde: Latent code for the gSDE exploration function

:return: Action distribution

"""

mean_actions = self.action_net(latent_pi)

if isinstance(self.action_dist, DiagGaussianDistribution):

return self.action_dist.proba_distribution(mean_actions, self.log_std)

elif isinstance(self.action_dist, CategoricalDistribution):

# Here mean_actions are the logits before the softmax

return self.action_dist.proba_distribution(action_logits=mean_actions)

elif isinstance(self.action_dist, MultiCategoricalDistribution):

# Here mean_actions are the flattened logits

return self.action_dist.proba_distribution(action_logits=mean_actions)

elif isinstance(self.action_dist, BernoulliDistribution):

# Here mean_actions are the logits (before rounding to get the binary actions)

return self.action_dist.proba_distribution(action_logits=mean_actions)

elif isinstance(self.action_dist, StateDependentNoiseDistribution):

return self.action_dist.proba_distribution(mean_actions, self.log_std, latent_sde)

else:

raise ValueError("Invalid action distribution")